The production of 847 Twins, the title track of the album Fan Art, is documented in four sections. The first section, Program, is a one-paragraph description of the music written for a concert booklet or album promotion. In it, I share information and thoughts that may help listeners enjoy the music. The second section, Form, is for creators who want to learn how I used electronic sounds in the composition. The third section, Code, is for technologists who want to learn how I designed the piece in SuperCollider, a code-based audio application. Links to the code are available here. The last section, Anecdote, offers additional background on 847 Twins but is not needed to enjoy the piece.

If preferred, read this article in PDF format.

Program

847 Twins is a two-movement piece based on the harmonic progressions of the Prelude and Fugue in C Minor (BWV 847) by J.S. Bach. Electronic remakes of Bach are a well-known practice pioneered by Wendy Carlos (Switched-On Bach) and Pierre Schaeffer (Bilude). I learned a great deal from reading about and listening to their works. J.S. Bach is also my composer hero. Therefore, it seemed appropriate to dedicate a song to my musical cornerstones in an album about fandom.

Listen to the tracks linked below before reading the next sections.

The tracks are available on other major platforms at https://noremixes.com/nore048/

Form

Mvt I. Pluck

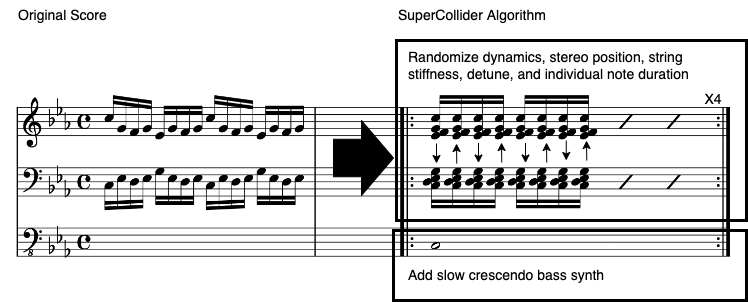

Pluck and Blip, the two movements of 847 Twins, are algorithms written in SuperCollider that use the harmonic progression of the Prelude in BWV 847. The downloadable code, 847_Pluck.scd, generates randomized voicing patterns played by a guitar-like synth. Below is a step-by-step explanation of the composition process.

1. Design an electronic string instrument. Each note of this instrument is detuned by a different ratio every time the string is “plucked.” The note’s duration, dynamic, string stiffness, and pan position also vary randomly.

2. Using the instrument from Step 1, strum a chord made of the notes in one measure of BWV 847. Unlike a guitar strum, a single strum can have multiple pan positions, accents, and note durations because of the randomization in Step 1.

3. Play each measure four times before advancing to the next measure.

4. Add a bass part with gradually increasing loudness. It plays the lowest note of the corresponding measure.

5. Add an intro and an outro to round out the form. Neither is quoted from BWV 847.
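The steps above can be made concrete with a minimal sketch in Python. This is not the SuperCollider code from 847_Pluck.scd. It models only the note-level logic: a random note order per measure (assuming the order is shuffled once per measure), four strokes per measure played forward or reversed, a random detune and random dynamics for each note, and a bass note taken from the lowest pitch. The chord data, every parameter range, the linear bass ramp, and the names render_pluck and pluck_params are hypothetical placeholders, not values from the piece.

```python
import random

# Placeholder measures as MIDI note numbers. Illustrative only: NOT transcribed from BWV 847.
CHORDS = [
    [48, 60, 63, 67, 72],
    [48, 58, 65, 68, 72],
    [47, 59, 62, 65, 67],
]

REPEATS_PER_MEASURE = 4  # each measure is strummed four times before advancing


def pluck_params(midi_note, rng):
    """One randomized 'pluck': a slightly detuned pair plus random dynamics."""
    freq = 440.0 * 2 ** ((midi_note - 69) / 12)  # MIDI note number -> Hz
    detune = rng.uniform(0.995, 1.005)           # hypothetical detune range
    return {
        "midi": midi_note,
        "freqs": (freq, freq * detune),          # two strings, slightly apart
        "amp": rng.uniform(0.2, 1.0),
        "dur": rng.uniform(0.5, 3.0),
        "pan": rng.uniform(-1.0, 1.0),
        "stiffness": rng.uniform(0.0, 1.0),
    }


def render_pluck(chords, seed=None):
    """Return one event per stroke, each carrying the measure's bass note."""
    rng = random.Random(seed)
    events = []
    for measure, chord in enumerate(chords):
        order = chord[:]
        rng.shuffle(order)   # random note order within the measure
        bass = min(chord)    # lowest note of the measure becomes the bass note
        for _ in range(REPEATS_PER_MEASURE):
            up = rng.random() < 0.5            # reversed order stands in for an upstroke
            notes = order[::-1] if up else order
            events.append({
                "measure": measure,
                "stroke": "up" if up else "down",
                "notes": [pluck_params(n, rng) for n in notes],
                "bass": bass,
                "bass_amp": (measure + 1) / len(chords),  # hypothetical linear ramp
            })
    return events
```

For example, render_pluck(CHORDS, seed=847) returns twelve strum events for the three placeholder measures. Changing the seed gives a different rendition, much as each run of the SuperCollider file does.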

In short, the first movement of 847 Twins is a reinterpretation of BWV 847 featuring an imaginary string instrument and a synth bass. I love how Bach created exciting music from a predictable rhythmic pattern: the key is harmony and voicing. In Pluck, I wanted to emphasize that aspect with an additional layer of dynamics and articulations. The added bass line, which imitates the “left hand” of basso continuo, fills in the low-frequency spectrum of the piece. The bass part is best experienced with headphones or a subwoofer.

Mvt II. Blip

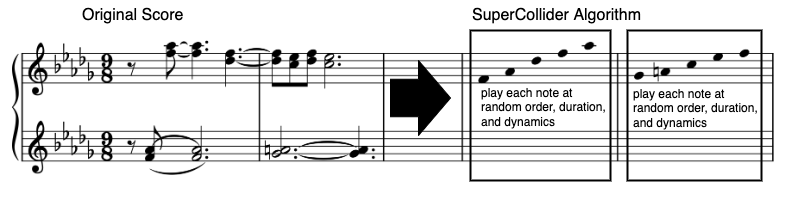

The first movement lacked counterpoint, so I tried to create electronic polyphony in the second movement. In Blip, each measure has 3-6 parts playing different phrases derived from a measure in BWV 847. The phrase shape, the number of voices, and the articulation are chosen randomly at every measure, creating a form that is disjunct yet interrelated. Schaeffer’s Bilude explores this idea by combining piano performance and recorded sounds.

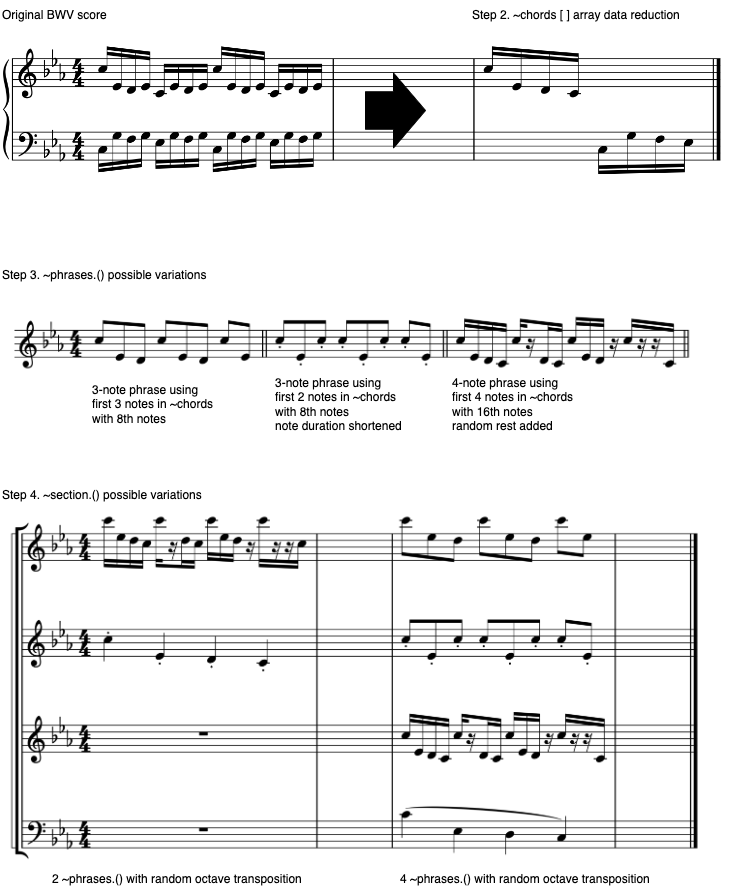

Below is my process for creating a random phrase generator. Please run 847_Blip.scd to hear the piece.

1. Create a list of pitch sets by removing repeated notes from each measure of BWV 847.

2. Make three different synth sounds.

3. Make a phrase generator that uses the list from Step 1 and the synths from Step 2. The instrument choice, phrase length, note subdivisions, and articulations are randomized. The SuperCollider code also has an option to generate rhythmic variation (i.e., inserting a rest in place of a note).

4. Make a polyphony generator that spawns multiple instances of the phrase generator from Step 3. The number of polyphonic voices and their octave transpositions are random.

5. Play and record the output of Step 4 twice, then import both recordings into a DAW. Insert a reverb plugin on one track and set it to 100% wet.
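Steps 1, 3, and 4 can be sketched in Python as follows. This illustrates the idea under assumptions and is not a port of 847_Blip.scd. The phrase-length, subdivision, rest-probability, voice-count, and octave ranges are hypothetical, as are the function names. Only the synth names PBeep, TBeep, and SBeep come from the piece.

```python
import random

SYNTHS = ["PBeep", "TBeep", "SBeep"]  # names from the piece; the sounds are not modeled here


def pitch_set(measure_notes):
    """Step 1: remove repeated notes, keeping the order of first appearance."""
    return list(dict.fromkeys(measure_notes))


def phrase(pset, rng, rests=False):
    """Step 3: a short phrase that walks through one pitch set."""
    length = rng.randint(2, 8)                    # hypothetical phrase-length range
    subdivision = rng.choice([0.125, 0.25, 0.5])  # hypothetical note durations in beats
    start = rng.randrange(len(pset))              # random entry point into the set
    notes = []
    for i in range(length):
        note = pset[(start + i) % len(pset)]
        if rests and rng.random() < 0.25:         # rhythmic variation: a rest replaces the note
            note = None
        notes.append(note)
    return {"synth": rng.choice(SYNTHS), "subdivision": subdivision, "notes": notes}


def section(pset, rng, rests=False):
    """Step 4: spawn several phrases, each shifted by a random octave."""
    voices = []
    for _ in range(rng.randint(2, 8)):            # hypothetical voice-count range
        octave = rng.randint(-2, 1)               # four possible octaves
        p = phrase(pset, rng, rests)
        p["octave"] = octave
        p["notes"] = [None if n is None else n + 12 * octave for n in p["notes"]]
        voices.append(p)
    return voices
```

Calling section(pitch_set(notes), random.Random(), rests=True) once per measure yields a different texture each time. That measure-to-measure contrast is what Blip relies on.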

The algorithm described above creates different timbres, polyphonic patterns, and numbers of voices at every measure. Furthermore, every run of the SuperCollider code makes a unique version of Blip. One measure can be a duet of two-note phrases, and the following measure can be an octet of eight phrases played across a four-octave range. The reverb is applied to a separate take, so the room sound created by the DAW reverb plugin does not match the dry source note for note. However, the two takes are similar enough to be heard as one whole.

Code

Mvt I. Pluck

The SuperCollider file for Pluck consists of seven parts. Please download and use 847_Pluck_Analysis.scd to hear and modify each part. Make sure to run the line s.options.memSize=8192*16 before booting the server so that enough memory is allocated.
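For reference, s.options.memSize is measured in kilobytes and defaults to 8192. The line above therefore requests sixteen times the default real-time memory:

$$
\texttt{memSize} = 8192 \times 16 = 131072\ \text{KB} = 128\ \text{MB}
$$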

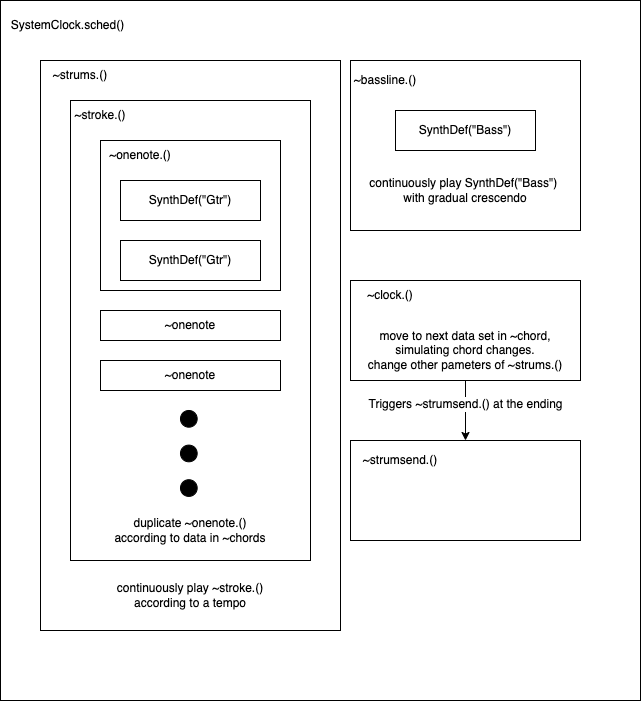

- SynthDefs: SynthDef("Gtr") uses a Karplus-Strong physical model with controllable pan, frequency, stiffness, amplitude, and duration. SynthDef("Bass") makes a sinusoid tone with a percussive amplitude envelope. The UGen Lag.kr smooths the sharp transient of the amplitude envelope.

- ~onenote: this function uses two instances of SynthDef("Gtr") to create a detuned note. The amount of detuning is randomized along with the SynthDef’s other parameters.

- ~stroke: this function creates instances of ~onenote with pitches specified in the ~chords array. ~chords is a collection of all the notes in the Bach Prelude, grouped and indexed by measure number. The order of the notes in a measure is random. ~stroke plays the chord in forward or reverse order to simulate a guitar’s downstrokes and upstrokes.

- ~strums: this function continuously triggers ~stroke. The global variable ~pulse determines the tempo. A separate function, ~strumsend, is used once for the ending.

- ~clock: this function changes the chord progression at time intervals set by the global variable ~mdur. It also changes the parameters of ~strums by altering the values of global variables ~mm, ~accent, ~volume, ~notedur, and ~stiff. Note that both ~strums and ~clock functions must run simultaneously for a correct chord progression.

- ~bassline: this function plays SynthDef("Bass") starting a few seconds after the piece begins. It uses an if condition to change the rhythmic pattern. The line pitch=~chords.at(count).sort.at(0) picks the lowest note of each measure as the bass note.

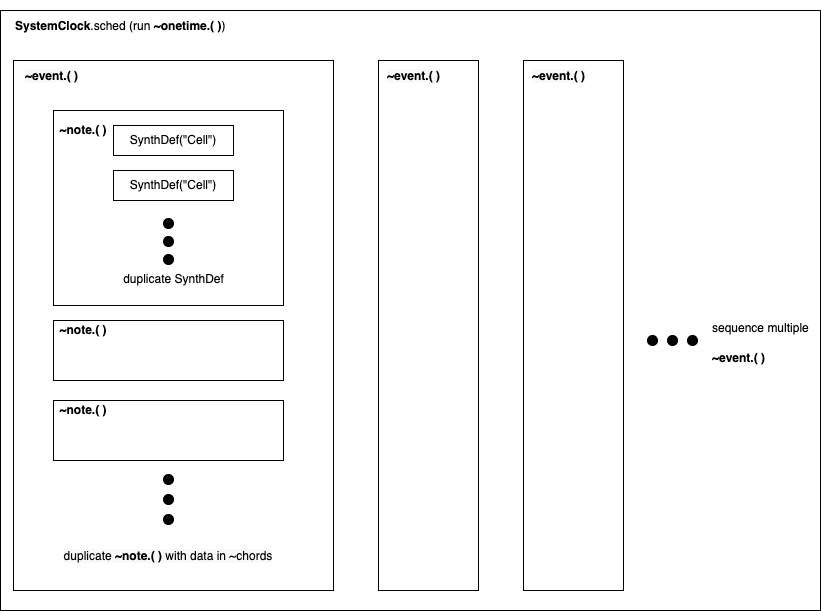

- SystemClock: this scheduler syncs ~strums, ~clock, and ~bassline to play a version of Pluck. Each run of the SystemClock code makes a new variation of the track.
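The sketch below may help readers curious about the Karplus-Strong model behind SynthDef("Gtr"). It implements the classic algorithm in plain Python. A burst of noise circulates through a delay line, and the length of that line sets the pitch. Averaging adjacent samples damps the high partials. The one_note function pairs two slightly detuned strings with an equal-power pan, loosely mirroring ~onenote. This is not how SuperCollider implements its UGens. The detune range, the decay value, and the function names are assumptions for illustration.

```python
import math
import random
from collections import deque


def karplus_strong(freq, duration, sample_rate=44100, decay=0.996, seed=None):
    """Classic Karplus-Strong: a noise burst circulating through a delay line."""
    rng = random.Random(seed)
    period = max(2, round(sample_rate / freq))  # delay length sets the pitch
    line = deque(rng.uniform(-1.0, 1.0) for _ in range(period))
    out = []
    for _ in range(int(duration * sample_rate)):
        current = line.popleft()
        # Averaging adjacent samples is a simple low-pass filter, so high partials
        # fade faster than the fundamental, as on a real plucked string.
        line.append(decay * 0.5 * (current + line[0]))
        out.append(current)
    return out


def one_note(freq, duration, rng, sample_rate=44100):
    """A detuned pair of strings with an equal-power pan, loosely like ~onenote."""
    detune = rng.uniform(0.997, 1.003)   # hypothetical detune range
    pan = rng.uniform(-1.0, 1.0)         # -1 = left, 1 = right
    a = karplus_strong(freq, duration, sample_rate, seed=rng.randrange(2**32))
    b = karplus_strong(freq * detune, duration, sample_rate, seed=rng.randrange(2**32))
    mono = [0.5 * (x + y) for x, y in zip(a, b)]
    angle = (pan + 1.0) * math.pi / 4.0  # map pan from -1..1 to 0..pi/2
    left_gain, right_gain = math.cos(angle), math.sin(angle)
    return [s * left_gain for s in mono], [s * right_gain for s in mono]
```

A lower decay value shortens the ring. This is one simple way to vary the character of each pluck.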

Mvt II. Blip

The SuperCollider file for Blip consists of four interconnected parts. Please download and run 847_Blip_Analysis.scd to hear each part.

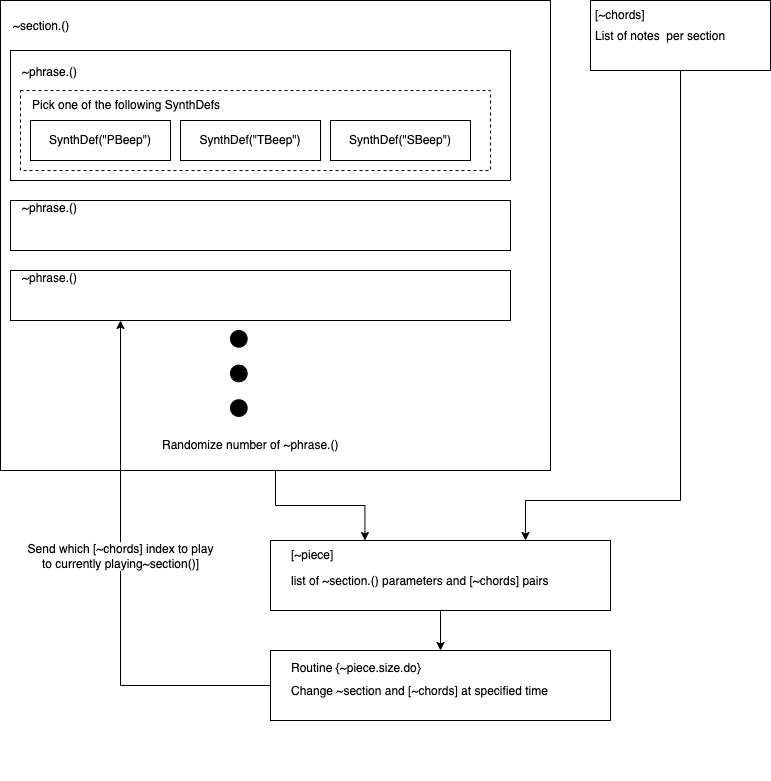

- SynthDefs: The three SynthDefs, PBeep, TBeep, and SBeep, are slightly detuned percussive instruments, each built on a classic oscillator waveform (sine, triangle, or pulse).

- ~phrase: this function creates a short melodic pattern based on the pitch set received from the global variable ~arp. It controls which SynthDef to use, amplitude, phrase length, note duration, and transposition. The last two arguments turn random rhythm generation and arpeggio pattern variation on or off.

- ~section: this function spawns multiple copies of ~phrase. The number of copies and their octave transpositions are randomized. The function also adds further variation to amplitude, note duration, and panning.

- Routine: the Routine in the last section uses the ~piece array as a cue list that specifies when and how to trigger ~section. The array ~chords is a list of all the notes in each measure of the Bach Prelude. The Routine also sends the current pitch set from ~chords to ~phrase via the global variable ~arp.
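The cue-list pattern in the Routine can be sketched in Python with a logical clock in place of real waiting. The Cue fields, the run_cues name, and the shared dictionary standing in for ~arp are hypothetical. The sketch shows only the control flow: update the shared pitch set, trigger a section, then advance time.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    """One entry of a hypothetical cue list, loosely modeled on ~piece."""
    measure: int             # index into the chord list
    wait: float              # seconds until the next cue
    rests: bool = False      # enable random-rhythm generation
    variation: bool = False  # enable arpeggio-pattern variation


def run_cues(cues, chords, trigger):
    """Walk the cue list on a logical clock instead of sleeping like a Routine.

    Before each trigger, the shared pitch set (standing in for the global ~arp)
    is updated from the chord list. Returns the total elapsed time.
    """
    shared = {"arp": None}
    now = 0.0
    for cue in cues:
        shared["arp"] = chords[cue.measure]
        trigger(now, shared["arp"], cue)
        now += cue.wait
    return now
```

Keeping time logical makes the cue order easy to check. Swapping it for real sleeps or a scheduler would turn the sketch into a playable sequencer.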

Anecdote

847 Twins does not use the Adagio section of the Prelude and Fugue. When composing the first movement, I could not find a way to transition from a constant 16th-note drive to a free, improvisational ending. I tried to address this gap by writing a complementary movement, Blip, but it did not work out. I found a satisfying solution six months after completing 847 Twins by turning to an instrument I can improvise on freely and fluently. Nim6tet, the sixth track in Fan Art, has six layers of no-input mixer improvisation guided by the chord progressions of the Adagio section. It shamelessly shows off no-input mixer sounds I cannot create with other instruments.

It took many attempts over a year and a half to finish three tracks based on the first half of BWV 847. An electronic interpretation of the Fugue is a puzzle yet to be solved.

More Analysis and Tutorials

- End Credits – Brief Analysis: an analysis of another piece in the same format as this article.

- Computer Music Practice Examples (CMPE) – tutorials on using computer music technology for instrument design, composition, performance, and presentation.

Updated on 4/13/2023