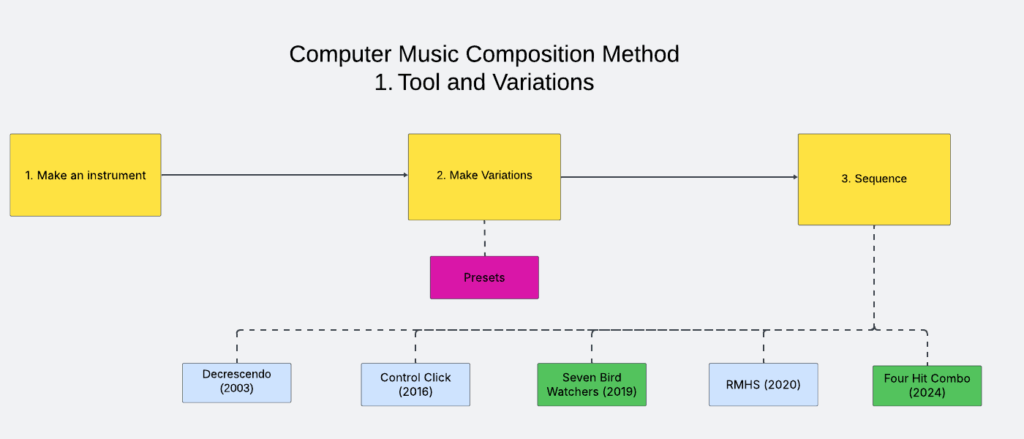

Since 2009, I have been presenting a solo set of live electronic music. Among the many electronic performance techniques, I specialize in creating electronic sounds on stage without pre-recorded samples. I use a combination of digital effect processors coded with SuperCollider to improvise a uniquely electronic soundscape in concerts and recordings. For more than a decade, I have marketed myself as an expert in that specific style. It is represented as a yellow rectangle in the diagram below.

The categorization is not meaningful to anyone else, but it was a useful research goal for me in the 2010s. I share three representative pieces of my solo electronic improvisation for listening and analysis purposes.

Three Examples

100 Strange Sounds (2012-2014) is a set of one hundred short video recordings featuring my live electronic music techniques. Each piece pairs a sound-making object with my SuperCollider code that processes its sound. I invite viewers to notice and enjoy the unexpected relationship between what they see and what they hear. For example, the sound of a cabbage becomes something else with a bunch of effect processors in 100 Strange Sounds #77.

Large Intestine (2013) is a piece I made after 100 Strange Sounds #42. As described in the blog on style analysis, the no-input mixer improvisation enhanced with SuperCollider has been my favorite electronic instrument for more than a decade. Large Intestine, as the title suggests, epitomizes my interest in noise, digital signal processing, and improvisation. I plan to play this work in as many concerts as possible in the future.

Touch (2014) is my kitchen-sink piece that pairs multiple sound objects with multiple effects. It’s a summary of 100 Strange Sounds, in which I bring random objects on stage and improvise the combination and sequence of sounds. The piece opened many doors to career opportunities in the 2010s as an electronic music improviser. The techniques and technologies I learned in performing and refining Touch became a source for future non-improvisational compositions for electronic ensembles.

Technology

All three pieces mentioned above use a variation of a single SuperCollider patch, available for download at this link. This linked PDF explains the hardware and software setup needed to perform the pieces (warning: it is a little outdated).

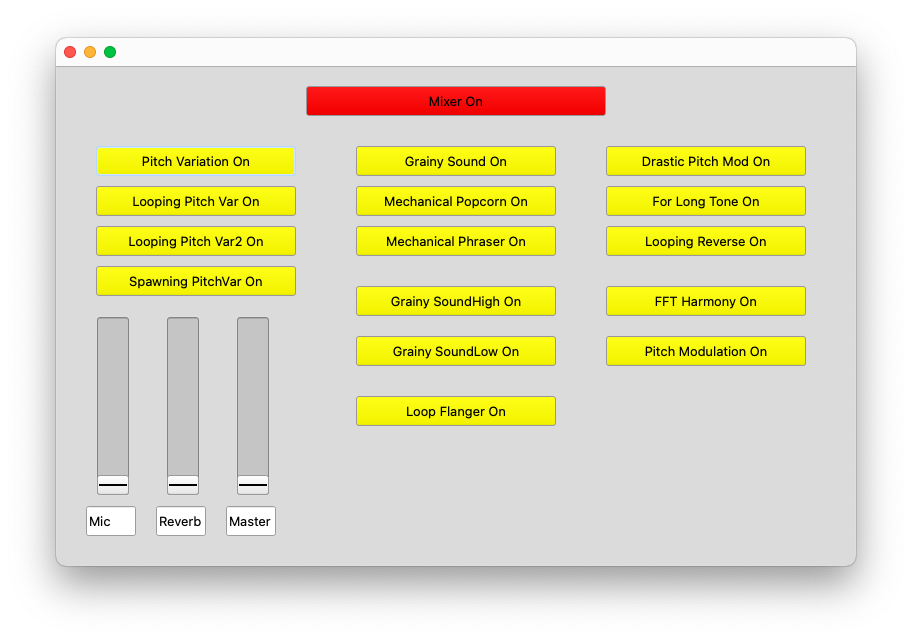

When I run the patch, it creates a GUI with multiple buttons that trigger customized effects. I control the number and timing of the effects’ on/off states with mouse clicks, with no MIDI controllers or control surfaces. A few clicks, probably unnoticed by the audience, are enough because I want the listeners to focus on my interaction with the non-electronic objects on stage.

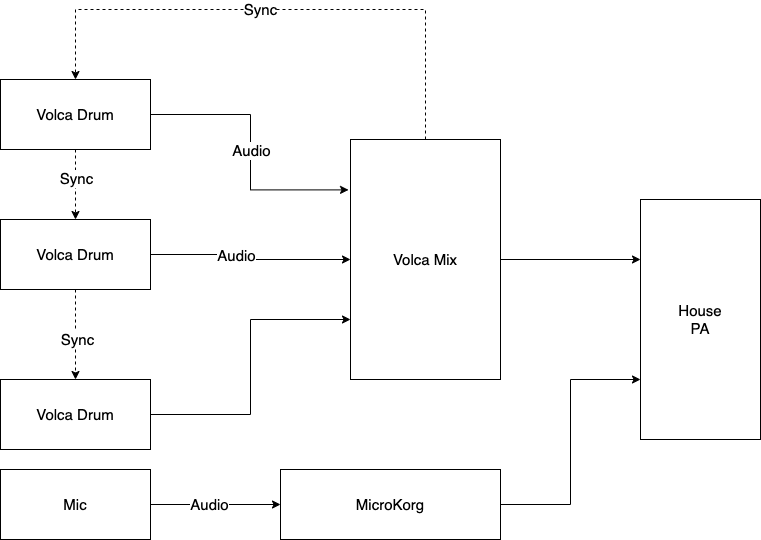
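For readers who want a concrete picture of the button-per-effect idea, here is a minimal sketch in Python. It is not the SuperCollider patch itself: the `EffectBank` class, the three toy effects, and all names are hypothetical, and the "audio" is just a short list of numbers. It only illustrates the logic of toggling effects on and off with single clicks and passing the input through whichever ones are currently active.

```python
from typing import Callable, Dict, List

Samples = List[float]
Effect = Callable[[Samples], Samples]


def gain(factor: float) -> Effect:
    """Return an effect that scales every sample by `factor`."""
    return lambda samples: [s * factor for s in samples]


def hard_clip(limit: float) -> Effect:
    """Return a crude distortion that clamps samples to [-limit, limit]."""
    return lambda samples: [max(-limit, min(limit, s)) for s in samples]


def reverse() -> Effect:
    """Return an effect that plays the buffer backwards."""
    return lambda samples: samples[::-1]


class EffectBank:
    """Named effects with on/off states, like a row of toggle buttons."""

    def __init__(self, effects: Dict[str, Effect]) -> None:
        self.effects = effects
        # Every effect starts switched off (hypothetical default).
        self.active = {name: False for name in effects}

    def click(self, name: str) -> bool:
        """Simulate one mouse click on a button: flip that effect's state."""
        self.active[name] = not self.active[name]
        return self.active[name]

    def process(self, samples: Samples) -> Samples:
        """Run the input through every active effect, in button order."""
        out = list(samples)
        for name, effect in self.effects.items():
            if self.active[name]:
                out = effect(out)
        return out


bank = EffectBank({
    "boost": gain(2.0),
    "clip": hard_clip(0.5),
    "reverse": reverse(),
})

dry = [0.1, -0.2, 0.4]   # stand-in for a microphone signal
bank.click("boost")      # first click: boost on
bank.click("clip")       # second click: clip on
wet = bank.process(dry)  # boosted, then clipped
```

In this toy model, two clicks are enough to change the sound, which mirrors the point above: the performer's attention can stay on the objects while the computer part changes with very little visible action.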

As for the hardware, I use a couple of microphones for Touch, one audio interface, and a laptop. This article explains the gear I used over the past 11 years.

Technique

As in other kinds of improvisation, the key technique in performing solo live electronic music is listening. I listen for variations that the computer part adds to the acoustic instruments, then respond with another instrument or effect. Because I cannot play a scale or harmony with an instrument like a cabbage, the listen-and-react decisions are often non-musical and raw. “The current sound is long, so I’ll play short sounds next.” “I will go from a simple to a complex texture.” “The sound is very high in pitch. I’ll complement it with a very low rumble.” I also ask questions and try to come up with the best answer on stage. “What happens if I granularize the chattering teeth sound?” “The plastic block sounds harsh. Can I make it harsher?” “What is common between a slinky and a coin sound?”

Free improvisation focusing on reactions and questions is fun, but I can quickly lose control of its length and form. So I plan specific gestures or sound combinations for transitions. The Extension and Connection blog linked earlier has such an example in Touch.

Anecdote

More than fifteen years of experience in improvising with live electronics forms the foundation of my musicianship. I identified myself as a composer after earning a PhD in composition in 2008, but that did not lead to gigs or collaborations when I moved to Philadelphia for my first job as a music technology professor. The dire situation led me to develop a solo set I could prepare and present quickly in any situation. The strategic change, fortunately, worked, giving me ample opportunity to refine my performance and improvisation techniques.

These days, I am comfortable identifying myself as a composer-performer of electronic music. My sound may not be fresh or cutting-edge at this point, but I think I have a bit more to contribute with the current solo setup. Perhaps the contribution is documentation and theorization. Perhaps it is just one more new piece!

More articles on electronic music composition, performance, and practice can be found at the Computer Music Practice project.