I often prepare a set for solo show opportunities. A set is a performance practice of playing multiple pieces without significant pauses (i.e., no “set changes”). It is often long (30+ minutes), and the works presented within have a common theme or instrumentation. The ability to perform a solo set is helpful, if not essential, to electronic music performers in getting gigs and collaborative projects. A DJ set at music festivals is a good example of a set performance.

I played a set of seven original compositions at the 16th Strange Beautiful Music (SBM) Festival in September 2023. I will use the recording of this set to show how I organize a 40-minute performance. I hope readers gain both macro- and micro-level insight into an electronic set performance, especially when this is read together with my analysis of solo set gear.

I go through four preparation steps for a set performance.

- Decide pieces

- Decide the order

- Practice transitions

- Practice sound check

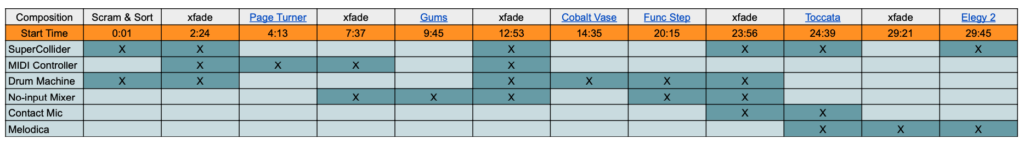

I will explain the details of each step in the subsequent sections. Please refer to the chart below to see the overall timeline of the SBM set. The chart lists the estimated starting time and instrument used in each section.

Link to Google Sheet version of the chart

Decide Pieces

The selection of pieces depends on external factors I cannot control. Examples are the total duration decided by the organizer, sound check time, and the venue’s equipment. Once I learn the external factors, I decide which pieces to include in a set. I was invited to perform for 40 minutes for the SBM at Andy Arts Center’s Hanger. I had one hour of tech time with an excellent audiovisual team. Given this information, I decided to play the following pieces.

- Scramble and Sort (2023) – for computer and drum machine

- Page Turner’s Agony (2021) – for computer and MIDI controller

- Gums (2013) – for no-input mixer

- Cobalt Vase (2019) – for drum machine

- Func Step Mode (2019) – for no-input mixer and drum machine

- Toccata (2009) – for computer and contact mic’ed objects

- Elegy No. 2 (2017) – for computer and melodica

Each piece in the set features a uniquely electronic sound and instrument. All pieces involve improvisation, so the audience hears an event-specific version of each piece. The SBM performance also included the world premiere of Scramble and Sort. Adding an artistic risk like this protects me from potential practice fatigue.

Decide the Order

The order of the pieces in a set should be carefully tweaked for seamless transitions between them. A well-thought-out sequence of compositions also keeps the audience engaged. My theme for the SBM set was to show multiple electronic instruments in different contexts, so the order in which I revealed different instruments and styles was a priority. I opened the set with Scramble and Sort as an appetizer: music with an easy-to-digest rhythm and the familiar sounds of a drum machine. The following two pieces, Page Turner’s Agony and Gums, had more abstract and timbre-based electronic sounds, featuring a MIDI controller and a no-input mixer. Then, as a main course, Cobalt Vase, Func Step Mode, and Toccata featured unconventional combinations of familiar instruments. These pieces were the most aggressive and the noisiest in the set, so they were not appropriate as opening pieces. As a palate cleanser, I ended the set with Elegy No. 2, a slow and minimalistic piece featuring melodica.

The visual elements are also a factor in deciding the order. One person playing an instrument with relatively little physical movement for an extended period is not exciting by default. I try to improve this by introducing a new instrument with each piece. In the SBM set, a drum machine, a no-input mixer, found objects on a contact mic, and a melodica were introduced in sequence. The chart above shows the order in which the instruments appear and how long each one is used.

Practice Transitions

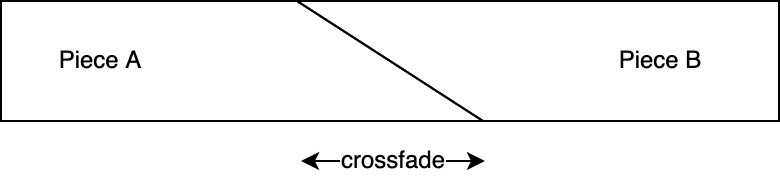

Smoothly connecting one piece’s ending to the next piece’s beginning is a concept I do not consider when composing a work. But I am responsible for making the transition musically satisfying in a set. I want the audience to enjoy the process of timbral and stylistic changes in gradual motion.

In music production, a crossfade function gradually transforms one sound into another (it is the equivalent of the cross dissolve in video editors). Most of my practice for the set focuses on devising and practicing live crossfades. Because the order of the pieces can be unique to each gig, the crossfades are unique to each event. I consider crossfades of significant length (2-3 minutes), as heard five times in the SBM set, to be mini event-specific compositions.
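For readers who think in code, the basic idea of a crossfade can be sketched offline in a few lines of Python. This is only an illustration of the concept, not how I performed the live crossfades in the SBM set. The function names `equal_power_gains` and `crossfade` are hypothetical, and the equal-power (cosine/sine) curve is an assumption I chose because it is a common way to keep perceived loudness roughly steady through the middle of a fade.

```python
import math


def equal_power_gains(position):
    """Return (outgoing_gain, incoming_gain) for a fade position in [0, 1].

    Assumption: an equal-power curve, where the squared gains sum to 1.
    """
    position = min(max(position, 0.0), 1.0)  # clamp to the valid range
    angle = position * math.pi / 2
    return math.cos(angle), math.sin(angle)


def crossfade(outgoing, incoming):
    """Mix two equal-length sample lists, fading from outgoing to incoming."""
    if len(outgoing) != len(incoming):
        raise ValueError("both signals must have the same length")
    n = len(outgoing)
    mixed = []
    for i in range(n):
        # Position runs from 0.0 at the first sample to 1.0 at the last.
        position = i / (n - 1) if n > 1 else 1.0
        g_out, g_in = equal_power_gains(position)
        mixed.append(g_out * outgoing[i] + g_in * incoming[i])
    return mixed


if __name__ == "__main__":
    # Toy example: fade from a constant signal into silence over five samples.
    print(crossfade([1.0] * 5, [0.0] * 5))
```

In a live set the "position" is my hand on a fader or knob rather than a loop counter, and a 2-3 minute transition usually involves more than two sound sources, so the sketch should be read as the simplest possible case.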

Practice Sound Check

Setting up and striking the gear should be part of the practice and preparation. A solo set often needs a suitcase full of cables and instruments. There is no time to think about signal flow during soundcheck. It takes me a few hours to test all the gear and figure out the optimal configuration for every gig. When the configuration is finalized, I practice setting up and tearing down the equipment. I aim to be ready for the sound check within 30 minutes of arrival at the venue.

Being as self-contained as possible in terms of gear increases efficiency. I packed all the gear and bought a folding table for the SBM set. The less time I spend finding the right table and setting up the gear, the more time I can use during the allotted sound check to troubleshoot and tweak the sound for the room. For the SBM performance, I left the box of toys I use for Toccata at home. So, I finished the sound check early and picked up rocks, bolts, and other objects in the venue’s parking lot. I hope no one noticed my mild panic before and during the performance.

Outro

Organizing and presenting a set is a skill that has helped my career as an electronic music performer. A well-practiced set is suitable for tours, guest lectures, and festival performances because of its efficiency and flexibility. Many collaborative opportunities came from meeting musicians and dancers while presenting in this format. Audiences also get to experience music they have heard on phones and computers in a more intimate and focused context. As for artistic growth, curating a set allows me to improve and reimagine existing works. Every set performance is practice for a future show. Sometimes, transitions become seeds for new compositions.